by Faisal Hoque, Pranay Sanklecha, Paul Scade

The US government has published a blueprint for maintaining American dominance in the age of AI. Here’s how global firms can respond while managing opportunities and risks.

Artificial intelligence is reshaping the competitive landscape, and governments are racing to position their economies for leadership. In the United States, the recently announced AI Action Plan signals a decisive shift in policy, favoring deregulation and rapid innovation over precaution and control.

Winning the Race: America’s AI Action Plan marks an important shift in both policy and philosophy. Rather than the government coordinating and safeguarding AI development – as under previous US administrations and as continues to be the case in most other countries – the plan sets out an approach that emphasizes deregulation, private-sector development, and a “try-first” mentality.

In pursuit of this goal, it outlines dozens of federal policy actions distributed across three pillars:

- Accelerate AI innovation

- Build American AI infrastructure

- Lead in international AI diplomacy and security

While the plan still includes room for national standards and approaches to evaluating AI tech and usage, such as NIST’s AI Risk Management Framework, the underlying philosophy is striking in its openness. Its goal is to “dismantle regulatory barriers” and support faster and more far-reaching AI innovation than was previously possible.

For multinationals operating in, or seeking to operate in, the United States, these principles create both opportunities and risks.

On the one hand, a light-touch regulatory environment will enable businesses to test minimum viable products (MVPs), implement new tools, and bring new products to market more rapidly than before. On the other hand, with fewer prescriptive federal guardrails, there is a heightened risk that flawed algorithms or systems rushed into production will fail to meet consumer needs or even act directly against consumers' interests. Such outcomes carry significant ethical and brand risks for the company responsible.

As outlined in an I by IMD article ‘From consumers to code: America’s audacious AI export move’, senior leaders cannot afford to ignore the new environment created by the Action Plan, if only because competitors will move quickly to exploit it. Effective engagement requires a systematic approach that works carefully to make the most of the full range of opportunities the plan offers while simultaneously minimizing the risks involved.

Dual mindsets: radical optimism and deep caution

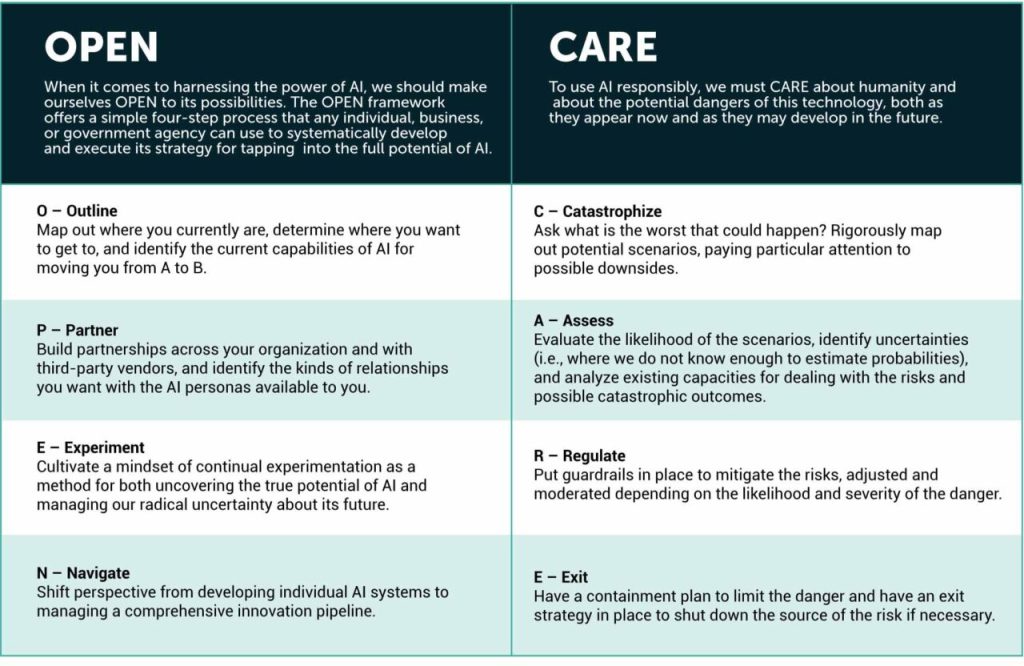

Responding effectively to the AI Action Plan requires a dual mindset that encompasses both radical optimism and deep caution. In our recent book TRANSCEND, and an accompanying article in Harvard Business Review, we set out two complementary frameworks designed to help companies operationalize this dual mindset while thinking systematically about how to implement AI-driven transformation.

The OPEN framework – Outline, Partner, Experiment, Navigate – helps companies fully harness AI’s enormous potential, while the CARE framework – Catastrophize, Assess, Regulate, Exit – ensures they effectively manage AI’s equally enormous risks.

These frameworks can provide valuable scaffolding for developing potential approaches for implementing AI in any environment. But four components – Partner, Experiment, Catastrophize, and Exit – offer particular value when responding to the US AI Action Plan.

Partner

In the OPEN framework, the Partner stage offers a tool for choosing and shaping relationships so you can bridge resource and knowledge gaps – whether what’s missing is , data, or hard-won know-how. The goal here is to narrow the gap between ambition and capability. In the context of America’s AI Action Plan, progress will often run through partnerships. These include conceptual partnerships between government and the private sector – with government coordinating priorities and standards while firms do the building – and practical collaboration among companies to use common open-weight AI stacks across the supply chain, consistent with the plan’s push for interoperability.

Partnerships also make you more resilient. The plan emphasizes the importance of standards, evaluation, and secure supply chains. Working with the groups shaping those norms will help ensure that your compliance evidence is reusable across business units, boards, and regulators. Understanding future export-control expectations can also reduce the chances that critical components or export paths become unavailable.

- Co-develop evaluation. Join NIST/CAISI convenings in your domain; adopt shared test suites, failure modes, and incident reporting formats so results are easily shared within your business and with regulators.

- De-risk the supply chain. Build an export-control-aware vendor map; require attestations on chip origin, model provenance, and data lineage, with audit and step-down clauses if a partner’s status changes.

- Encourage open-weight AI stack adoption. Partner with SMEs in your network to jointly adopt the same open-weight models.

Experiment

In OPEN, Experiment means running small, real-world trials to answer practical questions, such as “What value does this create?” “What are the risks and costs?” and “What would it take to run it at scale?” The aim is to learn quickly and inexpensively on the way to making an actionable decision about whether to take a program further or kill it. In the regulatory environment created by America’s AI Action Plan, the US market will provide uniquely beneficial conditions for effectively running “AI labs in the wild.” It will be possible to put new features in front of customers more rapidly and with fewer restrictions, dramatically shortening the path from proof-of-concept to working products.

- Design reversible trials. Ship production-adjacent pilots with a small footprint. Decide in advance which results will signal “scale,” “fix,” or “stop.”

- Build with the customer. Co-design pilots with customers and schedule fast feedback cycles so changes happen rapidly, and users see progress. Aim to make customers feel like you are experimenting alongside them, not on them.
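One way to make "decide in advance" concrete is to write the decision rule down before the pilot starts, so the results are read against fixed criteria rather than negotiated afterwards. The sketch below is purely illustrative: the `PilotThresholds` class, the `decide` function, and the two threshold values are hypothetical and would need to be replaced with metrics and limits that fit the pilot in question.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PilotThresholds:
    # Hypothetical values, agreed and recorded before the pilot begins.
    min_uplift_to_scale: float = 0.05         # e.g. 5% improvement in the target metric
    max_error_rate_to_continue: float = 0.02  # above this, the pilot is stopped


def decide(uplift: float, error_rate: float,
           t: PilotThresholds = PilotThresholds()) -> str:
    """Map observed pilot results to 'scale', 'fix', or 'stop'."""
    if error_rate > t.max_error_rate_to_continue:
        return "stop"   # harm signal overrides any upside
    if uplift >= t.min_uplift_to_scale:
        return "scale"  # safe and valuable enough to expand
    return "fix"        # safe, but not yet delivering enough value


print(decide(uplift=0.08, error_rate=0.01))  # scale
print(decide(uplift=0.01, error_rate=0.01))  # fix
print(decide(uplift=0.08, error_rate=0.05))  # stop
```

Checking the stop condition first reflects the reversible-trial idea: a pilot that crosses a harm threshold is halted even if it is also producing value.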

Catastrophize

In the CARE framework, Catastrophize means identifying the worst ways an AI system could plausibly fail so that it is possible to prepare for the risk and avoid or mitigate it. With the light-touch, try-first environment envisioned in America’s Action Plan, the responsibility for catastrophizing shifts decisively to businesses. As the government limits its regulatory requirements, businesses need to take up the slack by becoming the primary custodians of responsible AI implementation.

This is not just an ethical obligation. It is also sound business practice. A proactive approach to identifying risks and defining what levels are acceptable and what are not means leaders can approve appropriate plans with greater speed and confidence.

- Run pre-mortems and red-team sprints. List concrete harm hypotheses, stress-test with realistic inputs and adversarial prompts, estimate potential impact, and record explicit stop/rollback triggers.

- Set risk appetite and binding guardrails. Define unacceptable uses, threshold metrics (e.g., error rates, bias, safety), and required human-in-the-loop points. Publish them where teams will see them.
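Threshold metrics are easier to enforce when they live in one published place that both people and monitoring jobs can read. As a minimal sketch, assuming three made-up guardrails (`error_rate`, `bias_gap`, `unsafe_output_rate`) with placeholder limits, a check might look like this:

```python
# Hypothetical guardrails: metric name -> maximum acceptable value.
# Both the names and the limits are placeholders, not recommendations.
GUARDRAILS = {
    "error_rate": 0.02,
    "bias_gap": 0.05,
    "unsafe_output_rate": 0.001,
}


def breached(metrics, limits=GUARDRAILS):
    """Return the sorted names of guardrails whose limit is exceeded.

    A metric that was not measured is treated as a breach, so missing
    monitoring cannot silently pass the check.
    """
    return sorted(
        name
        for name, limit in limits.items()
        if metrics.get(name, float("inf")) > limit
    )


observed = {"error_rate": 0.01, "bias_gap": 0.07, "unsafe_output_rate": 0.0}
print(breached(observed))  # ['bias_gap']
```

A non-empty result could then feed the stop/rollback triggers recorded during the pre-mortem.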

Exit

In CARE, Exit means deciding, well before the need arises, exactly when and how you will stop or unwind an AI implementation. While the AI Action Plan includes a range of guardrails and standards, it leaves control over the exit process almost entirely in the hands of businesses. Pre-defined exit plans shorten crises, limit harm, and preserve value, so it is important to treat them as part of the design, not an afterthought.

Think of the Exit step as the development of a three-part architecture, with technical, reputational, and legal layers. The goal is simple: if an AI system misfires, or if public sentiment requires an implementation to come to an end, your teams must know who pulls the plug, what gets rolled back, and how fast normal service resumes.

- Layer 1: Technical resilience. Build every system with a parallel process that can immediately take over if the AI component fails. If your AI-driven supply chain optimization goes haywire, can you revert to human decision-making within hours, rather than days?

- Layer 2: Reputational firebreaks. Keep experiments at arm’s length from the core brand: use “lab” labels, limited cohorts, and explicit opt-ins. Prepare customer comms, refund/credit policies, and de-publishing steps in advance.

- Layer 3: Legal isolation. Separate high-risk trials in distinct entities where appropriate. Update Terms & Conditions to flag experimental status and data use, add audit trails to show reasonable care, and secure insurance that names AI failure modes (e.g., model error, IP/data leakage). Include supplier obligations in contracts, such as prompt breach notice, quarantine, artifact sharing, and cooperation during wind-down.
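For the technical layer, the "parallel process" can be as simple as a wrapper that routes every request through the AI component only while it is enabled and healthy, and otherwise hands it to the established non-AI path. The following is a sketch under stated assumptions: `WithFallback`, `ai_path`, and `manual_path` are hypothetical names, and a real system would also log each fallback and alert the team that owns the kill switch.

```python
class WithFallback:
    """Route requests to an AI path, with a manual path always on standby."""

    def __init__(self, ai_path, manual_path):
        self.ai_path = ai_path          # hypothetical AI-driven decision function
        self.manual_path = manual_path  # existing human or rule-based process
        self.ai_enabled = True

    def disable_ai(self):
        """Kill switch: all later requests go to the manual path."""
        self.ai_enabled = False

    def __call__(self, request):
        if self.ai_enabled:
            try:
                return self.ai_path(request)
            except Exception:
                # The AI component failed on this request; fall back below.
                pass
        return self.manual_path(request)


def flaky_ai(order):
    raise RuntimeError("model unavailable")


def manual_rule(order):
    return f"manual review: {order}"


route = WithFallback(flaky_ai, manual_rule)
print(route("order-17"))  # manual review: order-17
```

Keeping the manual path in regular use, rather than only in emergencies, is what makes reverting within hours rather than days realistic.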

America’s AI Action Plan represents a watershed moment for global multinationals, offering unprecedented freedom to innovate while demanding equally robust responsibility in implementation. Success in this new landscape requires that companies move beyond traditional risk-reward calculations and embrace a sophisticated dual approach – pursuing transformative opportunities while vigilantly managing risks.

By adopting these complementary twin tracks while focusing on strategic partnerships, rapid experimentation, proactive risk identification, and clear exit strategies, multinationals can position themselves not just to navigate the AI revolution but to help shape its trajectory.

Original article @ IMD.