by Faisal Hoque, Pranay Sanklecha, Paul Scade

As AI races ahead and regulators fall behind, the real question isn’t what your system can do, but what kind of organization you become by deploying it. Answering these four questions will help you ensure you implement responsible AI (RAI).

As AI systems become more powerful and pervasive across critical domains, incidents of harm to humans are increasing in frequency and severity. MIT’s AI Incident Tracker reports a 47% increase in the number of reported incidents between 2023 and 2024, while Stanford’s most recent AI Index Report puts the increase at 56.4%. The annual increase rises to 74% for incidents in the most severe category of harm, according to MIT.

This development puts business leaders in a difficult position. Historically, companies have relied on government oversight for the guardrails that minimize potential harms from new technologies and business activities. Yet governments currently lack the appetite, expertise, or frameworks to regulate AI implementation at the granular level. Increasingly, the responsibility for deploying responsible AI (RAI) systems falls to the businesses themselves.

Strategically implemented RAI offers organizations a way to mitigate and manage the risks associated with this technology. The potential benefits are considerable. According to a collaborative Stanford-Accenture survey of C-suite executives across 1,000 companies, businesses expect that adoption of RAI will increase revenues by an average of 18%. Similarly, a 2024 McKinsey survey of senior executives found that more than four in 10 (42%) respondents reported improved business operations and nearly three in 10 (28%) reported improved business outcomes as a result of beginning to operationalize RAI.

Yet while leaders increasingly recognize the importance and potential benefits of RAI, many organizations struggle to take effective steps to put in place a governance structure for it. A key reason for the gap between aspiration and action is that few businesses have the internal resources needed to navigate the complex philosophical territory involved in ensuring that AI is implemented in a truly responsible manner.

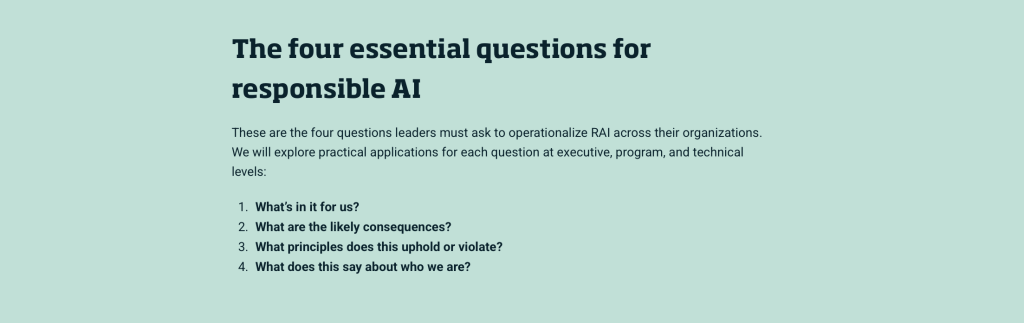

The approach consists of questions rather than prescriptive answers

A practical guide for operationalizing responsible AI

To bridge the gap between aspiration and operationalization, we offer a simple approach that’s practical and immediately actionable. Crucially, it consists of questions rather than prescriptive answers. By framing RAI in terms of a series of questions rather than a set of fixed rules to be applied, we provide a flexible yet structured approach that is not only sensitive to context but can also evolve alongside the technology itself.

The questions we identify are not arbitrarily chosen. Rather, they are distilled from established philosophical traditions across cultures, including Western ethical frameworks, Confucianism, and Nyaya ethics. They represent different but complementary perspectives on what responsibility means in practice.

For RAI to be truly effective, it must cascade from the boardroom to the project office. This requires a structured approach across three critical tiers: executive oversight, program management, and technical implementation. By consistently applying the four questions at each level, organizations can create alignment and ensure that RAI is embedded as a practical component in all AI development and deployment initiatives.

Question 1: What’s in it for us?

Organizations must pursue their self-interest as part of their duty to their shareholders. When considering an AI initiative, it is therefore essential for leaders to ask: What’s in it for us?

This question needs to be answered with nuance, using the concept of enlightened self-interest, a philosophical perspective dating back to ancient thinkers such as Epicurus and developed by economists like Adam Smith. Enlightened self-interest recognizes that pursuing one’s interests intelligently requires considering long-term consequences and interdependencies rather than focusing solely on immediate gains.

How you can use the question:

Executive level: Incorporate RAI metrics into board reports to show how responsible practices correlate with long-term value. Establish dedicated RAI committees to align initiatives with strategic plans.

Program level: Require a one-page “RAI value scorecard” as part of every AI business case. Include RAI implementation as a formal success metric alongside traditional KPIs.

Technical level: Track unresolved ethical issues the same way you track technical debt. Build explainability features into AI systems so that they can account for their decisions; this helps with both problem-solving and regulatory compliance.
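One way to picture tracking ethical issues like technical debt is a small register of open items that a team triages by severity and age. The sketch below is illustrative only: the `EthicalDebtItem` class, the `open_items` function, and the 1-to-5 severity scale are hypothetical names and assumptions, not part of any established tool or of the approach described above.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class EthicalDebtItem:
    """One unresolved ethical issue, logged like a technical-debt ticket."""
    title: str
    severity: int  # assumed scale: 1 (minor) to 5 (critical)
    opened: date
    resolved: bool = False


def open_items(register):
    """Return unresolved items, most severe first, then oldest first."""
    pending = [item for item in register if not item.resolved]
    return sorted(pending, key=lambda item: (-item.severity, item.opened))


# Hypothetical register entries for illustration.
register = [
    EthicalDebtItem("Chatbot gives no opt-out to a human agent", 2, date(2024, 1, 1)),
    EthicalDebtItem("Model cannot explain loan refusals", 5, date(2024, 3, 1)),
    EthicalDebtItem("Training data under-represents older users", 5, date(2024, 2, 1)),
    EthicalDebtItem("Consent wording unclear", 4, date(2024, 1, 1), resolved=True),
]

for item in open_items(register):
    print(item.severity, item.opened.isoformat(), item.title)
```

In practice a team would more likely add a label or issue type to its existing ticketing system than maintain a separate list; the point is only that the items are prioritized and reviewed on the same cadence as other debt.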

Question 2: What are the likely consequences?

Implementing RAI demands that leaders consider impacts on all stakeholders: employees, customers, communities, and society at large, in addition to shareholders. By asking, “What are the likely consequences?” at key planning and implementation stages, organizations can better anticipate risks and take meaningful steps to prevent them. Drawing from the consequentialist ideas championed in Nyaya ethics and by Western philosophers like John Stuart Mill and Jeremy Bentham, this perspective requires leaders to divorce intentions from outcomes and focus on judging the latter alone.

How you can use the question:

Executive level: Hold quarterly scenario planning sessions on the societal impacts of flagship AI systems. Commission independent audits of the downstream effects of high-visibility models.

Program level: Schedule “impact reviews” at each major milestone to map affected stakeholders. Use a standardized “consequence-severity framework” to prioritize mitigation resources.

Technical level: Embed automated fairness and safety tests in the continuous integration/continuous deployment (CI/CD) pipeline. Implement enhanced monitoring for AI systems with high-consequence potential.
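As a rough illustration of an automated fairness test that could run in a CI/CD pipeline, the sketch below compares the rate of positive predictions across groups and fails when the gap exceeds a threshold. It assumes demographic parity as the metric and a threshold of 0.2; both are placeholder choices, and the function names (`selection_rates`, `demographic_parity_gap`, `passes_fairness_check`) are hypothetical. Which metric and threshold are appropriate depends on the system and its context.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions for each group label."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


def passes_fairness_check(predictions, groups, max_gap=0.2):
    """True when the gap is within the assumed threshold (0.2 is illustrative)."""
    return demographic_parity_gap(predictions, groups) <= max_gap


# Hypothetical model outputs on a held-out evaluation set.
preds = [1, 0, 1, 0, 1, 1]
grps = ["a", "a", "a", "b", "b", "b"]
print(selection_rates(preds, grps))
print(passes_fairness_check(preds, grps))
```

Wrapped in a test function that asserts `passes_fairness_check(...)`, a check like this would block a deployment in the same way a failing unit test does.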

Question 3: What principles does this uphold or violate?

When leaders systematically ask of their AI initiatives, “What principles does this uphold or violate?” they ensure that AI development and deployment remain anchored in ethical foundations.

This question draws from the deontological ethics associated with Immanuel Kant, which emphasizes that certain actions are inherently right or wrong regardless of their consequences. It compels organizations to identify and articulate their guiding principles explicitly, helping leaders make intentional and principle-driven decisions around technological innovation.

How you can use the question:

Executive level: Run a formal “principle alignment” review before giving the go-ahead to any AI initiative. Publish a brief annual account of how deployed models map to the organization’s ethical commitments.

Program level: Add “principle alignment” as a gate in stage-gate reviews. Provide project teams with principle-specific design requirements for all AI project plans.

Technical level: Map technical specifications directly to organizational principles. Run principle-focused design reviews and document how choices honor stated values.

Question 4: What does this say about who we are?

When IKEA began investing heavily in AI to replace its call center staff, it didn’t just fire those employees. Instead, it invested in upskilling them for more sustainable positions. The company recognized its responsibility toward its employees and found a way to harness AI’s efficiency benefits while still providing meaningful work. In doing so, IKEA expressed something profound about its culture and values, and ultimately about its identity as an organization.

When leaders ask, “What does this say about who we are?” they are forced to consider what kind of organization they aspire to lead and how their values are expressed through their AI initiatives. This question transforms AI implementation from a purely technical exercise into a moment for organizational reflection and affirmation of purpose.

How you can use the question:

Executive level: Assess major AI initiatives through the lens of organizational identity. Ask, “Is this who we want to be?”

Program level: Insert brief “values check-ins” in sprint reviews. Include RAI capability-building goals in team development plans.

Technical level: Design human-in-the-loop mechanisms for high-stakes decisions. Prioritize explainability features that demonstrate a commitment to transparency.
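A human-in-the-loop mechanism can be as simple as a routing rule that sends certain decisions to a person instead of applying them automatically. The sketch below is a minimal example under stated assumptions: the `Decision` class, the `route` function, the `high_stakes` flag, and the 0.9 confidence threshold are all hypothetical, and how an organization defines "high stakes" is a policy question rather than a technical one.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    """A model output awaiting action."""
    outcome: str
    confidence: float  # model's confidence in the outcome, from 0.0 to 1.0
    high_stakes: bool  # set by policy, e.g. decisions affecting jobs or credit


def route(decision, min_confidence=0.9):
    """Send high-stakes or low-confidence decisions to a human reviewer."""
    if decision.high_stakes or decision.confidence < min_confidence:
        return "human_review"
    return "auto_apply"


print(route(Decision("approve", 0.99, high_stakes=True)))
print(route(Decision("approve", 0.95, high_stakes=False)))
print(route(Decision("deny", 0.50, high_stakes=False)))
```

Note that the rule routes every high-stakes decision to a person regardless of model confidence, which reflects the idea that some decisions warrant human judgment however certain the system appears.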

Conclusion

Turning the high-flown rhetoric of responsible AI into operational reality requires deliberate integration at all levels of the organization. By asking the same questions at three crucial organizational tiers, companies can systematically ensure this integration. Further, companies can do this while retaining the virtues of flexibility and context-sensitivity; while the four questions provide a consistent scaffolding, they must be adapted to accommodate different responsibilities and contexts across the organization.